In 2026, computer vision, the field concerned with how machines interpret visual information, continues to develop rapidly. The trend is reflected in the significant growth of the computer vision market and in a study commissioned by IBM, in which 59% of the surveyed enterprises said they are already working with artificial intelligence and intend to accelerate and increase their investment in the technology.

Next-generation object detection is transforming the automotive industry and manufacturing, while driving the development of “smart” stores and cities of the future. So what exactly is driving these technologies, and where is everything headed next?

Autonomous transportation in 2026

In 2026, the focus of autonomous systems has shifted significantly. The key metric used to be the accuracy of object detection in individual frames; attention now centers on the system’s ability to interpret pedestrian behavior, identify potentially dangerous objects, predict their actions a few seconds ahead, and respond appropriately in real time.

Against this background, two fundamentally different approaches to autonomous driving have emerged, which determine the development of the industry:

- vision-only strategies that rely exclusively on cameras and neural networks and depend on the scale of training data;

- multi-sensor platforms that combine cameras, LiDAR, and radar, increasing reliability through sensor redundancy.

Tesla: betting on vision-only

One of the key trends in recent years has been Tesla’s strategy of abandoning expensive LiDAR sensors in favor of systems based exclusively on cameras and neural networks.

In 2025, Tesla expanded testing of its self-driving system in Austin, Texas, where cars participated in limited robotaxi trials, including tests without a driver in the cabin.

Tesla has designated 2026 as a “prove-it year”, in which it must prove the viability of its autonomous technologies on the road. Key initiatives include:

- scaling Full Self-Driving to new areas and cities;

- launching a fully autonomous robotaxi service;

- starting series production of the Cybercab, a purpose-built robotaxi without a steering wheel or pedals, planned for around April 2026.

Waymo: expansion and new platform models

Waymo has adopted a strategy of deep sensor redundancy, combining cameras, radar, and LiDAR to ensure maximum accuracy of the perception system across various road conditions. This multi-sensor stack enables a more comprehensive perception of the environment around the car, particularly in situations with poor visibility, complex shadows, or cluttered scenes, where a camera alone may be insufficient.

One of the key manifestations of this strategy is the “Ojai” robotaxi, presented by Waymo at CES 2026. Formerly known as ZeekrRT, this autonomous minivan was developed in partnership with the Chinese manufacturer Zeekr and subsequently adapted by Waymo to use its own suite of sensors and algorithms. The vehicle integrates 13 cameras, 6 radars, and 4 LiDAR sensors, providing broad scene coverage, redundant data sources, and increased reliability of the perception module in real-world driving conditions.

Waymo is expanding rapidly: by the end of December 2025 it had reached approximately 450,000 paid robotaxi trips per week across five US cities (Atlanta, Austin, Los Angeles, Phoenix, and San Francisco). This is almost double the April 2025 figure of about 250,000 trips per week.

Waymo has also officially set an ambitious goal for 2026: one million paid robotaxi trips per week, more than double the figure at the end of 2025 and four times the April 2025 level. To achieve this, the company plans to expand its presence to more than 20 cities in the US and international markets, including London and Tokyo, and to increase its fleet of autonomous vehicles.
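The weekly trip figures quoted above can be compared with a few lines of arithmetic. This is only a quick sketch: the constants are the approximate numbers from the text, and the helper name `growth_multiple` is made up for illustration.

```python
# Approximate weekly paid-trip figures as quoted in the text.
APRIL_2025_TRIPS = 250_000
DECEMBER_2025_TRIPS = 450_000
TARGET_2026_TRIPS = 1_000_000


def growth_multiple(start: int, end: int) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


# April 2025 -> December 2025
print(round(growth_multiple(APRIL_2025_TRIPS, DECEMBER_2025_TRIPS), 2))   # 1.8
# December 2025 -> 2026 target
print(round(growth_multiple(DECEMBER_2025_TRIPS, TARGET_2026_TRIPS), 2))  # 2.22
# April 2025 -> 2026 target
print(round(growth_multiple(APRIL_2025_TRIPS, TARGET_2026_TRIPS), 2))     # 4.0
```

In other words, the one-million target is roughly 2.2 times the December 2025 level and four times the April 2025 level.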

Humanoid robots in production

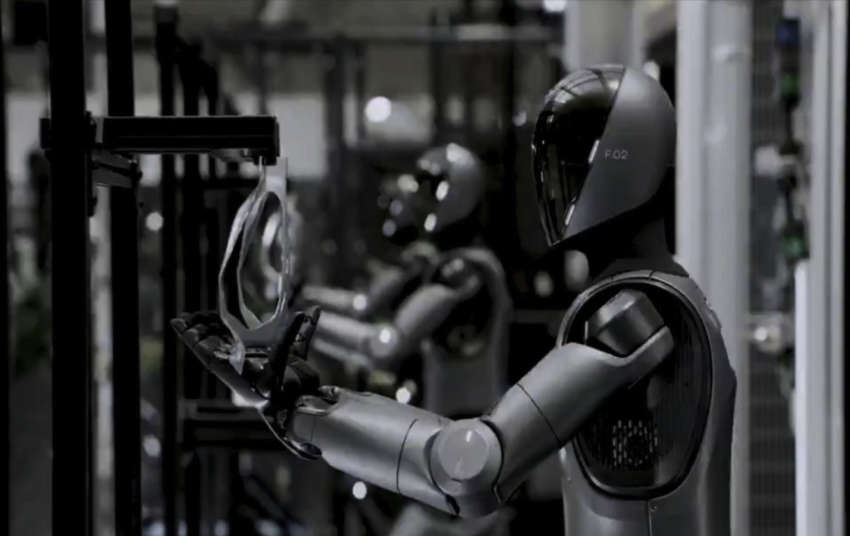

Figure AI, one of the industry’s leading companies, has successfully transitioned from laboratory prototypes to mass production with the introduction of Figure 03. This is the third generation of humanoids, where computer vision has evolved from being merely the “eyes” of the robot to becoming the foundation of its physical interaction with the world.

Here’s what makes these robots unique:

- Instant real-time perception

The updated vision system doubles the frame rate and minimizes data transmission delay (latency). In a dynamic environment, such as a warehouse or kitchen, this allows the robot to react to a falling object or a person’s sudden movement faster than a human could.

- Solving the problem of “blind spots”

A classic problem in robotics is occlusion, when the robot’s own hand blocks the cameras’ view. Figure 03 features wide-angle cameras and tactile sensors in its palms, enabling it to “see with its hands” even in deep drawers or on cluttered shelves and to manipulate objects with high precision.

- Neuroarchitecture for VLA understanding

Helix, a multimodal model of the Vision-Language-Action (VLA) class, enables the robot to understand semantic context in phrases such as “it’s fragile”, “it’s hot inside”, and “you need to grab the handle”. Helix also enables the robot to learn new actions simply by observing a person.

The previous generation, Figure 02, was deployed in real-world conditions at the BMW Group Plant Spartanburg in South Carolina (USA). The robots worked in the body shop, performing tasks such as lifting and placing metal sheets on the production line. During the 11-month project, they worked 10-hour shifts, 5 days a week, loading more than 90,000 parts and contributing to the production of more than 30,000 BMW X3 cars. The project was one of the first deployments of its kind in series production and provided Figure with valuable data for improving the next generation of robots, Figure 03.

Computer vision in retail

In early January, Amazon introduced an updated version of its smart Dash Cart. For a long time, the fresh fruit and vegetable departments have remained a “blind spot” for computer vision algorithms. Due to the high visual similarity of varieties, buyers had to manually enter PLU codes on the cart’s touchscreen, which slowed down the purchase process.

The new carts are equipped with high-dynamic range cameras and integrated scales. The CV system has learned to analyze the microtexture of the fruit skin. According to the stated characteristics, the accuracy of distinguishing apple varieties (for example, Fuji vs. Gala) reaches 99%.

The algorithm works on the principle of cross-checking independent sensors (sensor fusion):

- Visual analysis: the camera captures specific patterns (stripes, color gradient, shape).

- Physical analysis: weight sensors instantly compare the item’s measured weight against a reference database.

This allows customers to add fresh produce to their cart without any additional action: the system automatically adds the correct item to the receipt, even distinguishing degrees of ripeness and organic from conventional produce.
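The cross-check described above can be illustrated with a minimal sketch. It does not reflect Amazon’s actual implementation, which is not public. It assumes the camera model outputs a confidence score per candidate label and that the scale reading is compared against a plausible per-item weight range. The reference table, the `fuse` function, and the 0.6 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ProduceReference:
    """Plausible weight range for one item of a produce type (illustrative values)."""
    min_grams: float
    max_grams: float


# Hypothetical reference database; real values would come from the retailer.
REFERENCE: Dict[str, ProduceReference] = {
    "fuji_apple": ProduceReference(150.0, 250.0),
    "gala_apple": ProduceReference(130.0, 220.0),
    "watermelon": ProduceReference(3000.0, 9000.0),
}


def fuse(visual_scores: Dict[str, float], weight_grams: float,
         min_confidence: float = 0.6) -> Optional[str]:
    """Return the best visual label that the scale reading also supports.

    Labels whose reference weight range excludes the measured weight are
    discarded; None means the cart should fall back to manual entry.
    """
    plausible = {
        label: score
        for label, score in visual_scores.items()
        if label in REFERENCE
        and REFERENCE[label].min_grams <= weight_grams <= REFERENCE[label].max_grams
    }
    if not plausible:
        return None
    best = max(plausible, key=lambda label: plausible[label])
    return best if plausible[best] >= min_confidence else None


# The camera favors Fuji and the weight is consistent with a single apple.
print(fuse({"fuji_apple": 0.9, "gala_apple": 0.08}, 180.0))
# The camera favors watermelon, but 180 g rules it out; the remaining
# candidate is below the confidence threshold, so nothing is auto-added.
print(fuse({"watermelon": 0.7, "fuji_apple": 0.3}, 180.0))
```

The design choice in this sketch is that the scale acts as a veto rather than a second classifier: it never picks a label on its own, it only removes visual candidates that are physically implausible.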

Foundation for next-generation systems

The emphasis in the development of computer vision systems has finally shifted from the architecture of neural networks to the quality of the data on which they are trained. In an era where algorithms are widely available, the purity and accuracy of training sets determine the market success of AI products.

In practice, this means moving from mere quantity of images to deep expertise. Consider a typical case handled by specialized companies: preparing data for autonomous vehicles in extreme weather. When a camera captures a pedestrian during heavy rain or in night fog, standard algorithms often “lose” the object’s boundaries. Therefore, specialists from teams like Keymakr manually refine every contour, combining human experience with smart automation tools. This allows for the creation of datasets where every object is labeled with maximum precision, despite visual noise.

To ensure the reliability of models, modern pipelines use a four-level validation system:

Level 1: Automated control and pre-processing. The first stage is executed by algorithms. The system automatically filters the input stream, eliminating corrupted files or duplicates.

Level 2: Verification by annotators. Verifiers fix labeling inaccuracies, adjust object contours in difficult lighting conditions, and remove erroneous annotations.

Level 3: Audit by QA specialists. Specialists analyze data samples to ensure compliance with project standards and guidelines. They identify error patterns (for example, systematic inaccuracies in the marking of certain classes of objects) and unify approaches between different groups of annotators.

Level 4: Strategic project analytics. The final stage involves consolidating results at the project management level. A final check is carried out before sending the finished project to the client.
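The four levels above can be sketched as a simple chain of functions. This is an illustrative outline under stated assumptions, not any vendor’s actual pipeline: the `Sample` type, the function names, and the rules inside each level (hash-based deduplication, index-keyed corrections, a label whitelist for the audit) are all hypothetical stand-ins for the human and automated steps described in the text.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Sample:
    payload: bytes          # raw image bytes
    label: str = ""         # annotation (simplified to a single class name)
    reviewed: bool = False  # set once an annotator has verified the sample


def level1_filter(samples: List[Sample]) -> List[Sample]:
    """Level 1: drop corrupted (here: empty) files and exact duplicates."""
    seen: Set[str] = set()
    kept: List[Sample] = []
    for sample in samples:
        if not sample.payload:
            continue
        digest = hashlib.sha256(sample.payload).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        kept.append(sample)
    return kept


def level2_verify(samples: List[Sample], corrections: Dict[int, str]) -> List[Sample]:
    """Level 2: apply annotator corrections (keyed by index) and mark as reviewed."""
    for index, sample in enumerate(samples):
        if index in corrections:
            sample.label = corrections[index]
        sample.reviewed = True
    return samples


def level3_audit(samples: List[Sample], allowed_labels: Set[str],
                 sample_every: int = 2) -> List[int]:
    """Level 3: QA spot check; return indices of sampled items that break the guidelines."""
    return [
        index
        for index in range(0, len(samples), sample_every)
        if samples[index].label not in allowed_labels
    ]


def level4_release(samples: List[Sample], audit_failures: List[int]) -> bool:
    """Level 4: final gate before delivery to the client."""
    return bool(samples) and not audit_failures and all(s.reviewed for s in samples)


raw = [
    Sample(b"img-a", "car"),
    Sample(b"img-a", "car"),         # duplicate
    Sample(b"", "car"),              # corrupted file
    Sample(b"img-b", "pedestrain"),  # typo that an annotator corrects
]
batch = level2_verify(level1_filter(raw), corrections={1: "pedestrian"})
failures = level3_audit(batch, allowed_labels={"car", "pedestrian"}, sample_every=1)
print(len(batch), failures, level4_release(batch, failures))
```

In a real project, levels 2 to 4 are carried out by people. The functions here only mark where their decisions would enter the data flow.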

Future outlook

According to CNET’s analytics, 2026 marks a fundamental shift in AI development, with the dominance of large language models giving way to the era of so-called World Models. If previous years were devoted to teaching machines to generate text, now the industry is focusing on the ability of algorithms to deeply understand the physical nature of the world.

In particular, the “godmother of AI” Fei-Fei Li calls spatial intelligence the next great frontier of innovation, which will revolutionize robotics, storytelling, and scientific discovery. The global nature of this trend was also confirmed by Nvidia’s strategy, announced at CES 2026, where CEO Jensen Huang emphasized that “every single six months, a new model is emerging, and these models are getting smarter and smarter”.

The next stage of AI development, anticipated for 2026-2027, involves a deep understanding of the world, where algorithms can predict how objects will behave in real-world conditions.