Keymakr is a leading provider of AI training data for Computer Vision. They help at every stage of the pipeline from collecting, creating, or generating data, to its annotation and model output validation.

Interview with Maria Greicer, VP of Partnerships at Keymakr.

What purchasing advice do you have for clients in this field?

Maria Greicer: Let’s start with a quick overview of the field in general. We specialize in computer vision training data – images, videos, or 3D point clouds. Of course, there are other data types like text, figures, and audio, but we won’t focus on them here.

To find training data, you can either find a service provider or use free, open-source datasets. Open-source datasets are great for small projects or research, but for commercial products, you’ll usually need to create your own.

If you need a small amount of data, in-house annotation is feasible. However, if you require more than a few part-time workers to deal with your data, outsourcing is often more efficient. Outsourcing is suitable for projects of any size, as setting up complex processes and managing teams can quickly become cumbersome.

How do I know whether I need to start outsourcing my data processes?

M.G: Understand your needs first. For simple tasks with no security or privacy concerns, crowdsourcing platforms like Mechanical Turk can be a cost-effective option.

Let’s say you have 10,000 images and need bounding boxes that don’t require much precision or specialist knowledge. That’s a very simple task, so crowdsourcing is your number one option for cheap and simple.

However, if you need quality, data protection, and fast turnaround times, a dedicated service provider is crucial. They’ll handle the entire project, from training a team to ensuring data quality and security.

What is important to look for in a service provider?

M.G: You go to a number of service providers, let’s say three or four, and give them the same dataset. It could be 300 images or five to ten videos – you provide them with annotation instructions and see how well they perform the task.

This is what we call a pilot, or a benchmarking study. Annotation results aren’t just about the labels themselves. You want to see how fast they respond and how well they understand the task. Here are some questions to ask:

- What is the correspondence like?

- How easy is it to get in touch?

- What questions are they asking?

- Do you get a dedicated project manager?

- Do you have to speak to a bunch of people to get things done?

Evaluate their responsiveness, understanding of the task, communication style, platform, turnaround time, and contract terms.

Important note: many companies put their best people on a pilot. So, you may get perfect datasets for your pilot, but then you’re locked into a contract with the provider and quality slips. Look for providers who prioritize consistent quality and offer flexible terms.

We don’t structure contracts this way, so it’s in our best interests to deliver the best quality data all the time. If we don’t deliver, you’re free to walk away and choose another service.

Comparison: what key features differentiate Keymakr on the market?

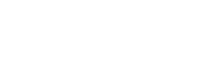

M.G: We have our own customizable annotation tool called Keylabs, ensuring full control and troubleshooting capabilities. This is an important advantage.

We’ve heard this story many times from our clients – their annotation tool was from one company and the service provider was another company. They didn’t communicate, so if the tool had issues, there was no fixing it.

This costs the client a lot of money, because the service provider is paying for a tool and passing those costs on to you. It’s not efficient. So this is an important question: does the company have control over the tools they use?

Supported types of annotation are also relevant. For example, Keylabs supports all types of annotations – instance and semantic segmentation, skeletons, key points, bounding boxes, etc. We also do custom tagging where each object per frame or per image can have unique attributes.

Functionality: tell us about ease of use and how Keymakr’s services integrate with other solutions

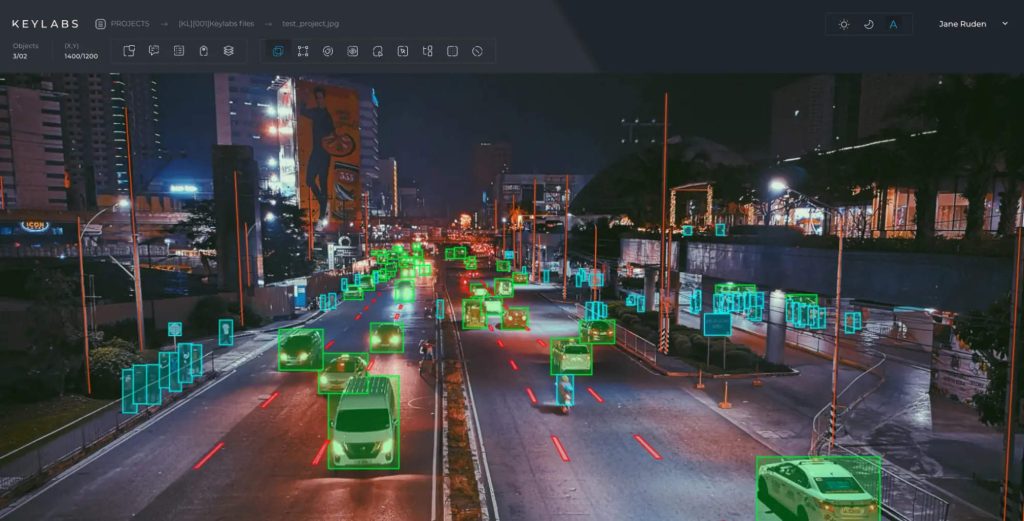

M.G: We create a user account on our platform where our clients can log in and see the results live. It’s a simple and user-friendly experience. You can also export all the data from Keylabs directly.

We work with any custom annotation pipeline and data sharing pipeline. Our clients tell us how they want to set up data sharing protocols, and we do that for them. For example, if you have data in a specific S3 bucket, we would connect to that bucket, download the data, do the annotations, and then upload the results to whichever S3 bucket you specify. It can all be done automatically.

From a client perspective, it’s very easy. Just tell us: “take it from here, put it here when it’s completed.” We do all the work in the background ourselves.
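The "take it from here, put it here" flow can be sketched roughly as below. This is an illustration only, not Keymakr’s implementation: `InMemoryStore`, `run_pipeline`, and `placeholder_annotate` are hypothetical names, and the in-memory store stands in for the source and destination buckets so the sketch runs without cloud access. In a real setup, the two stores would be S3 buckets accessed through an SDK.

```python
import json
from typing import Callable, Dict, List, Optional


class InMemoryStore:
    """Hypothetical stand-in for a storage bucket (assumption, not a real SDK)."""

    def __init__(self, objects: Optional[Dict[str, bytes]] = None) -> None:
        self._objects: Dict[str, bytes] = dict(objects or {})

    def list_keys(self) -> List[str]:
        return sorted(self._objects)

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data


def placeholder_annotate(key: str, raw: bytes) -> bytes:
    """Placeholder only: real annotation is done by people and tools."""
    record = {"source": key, "size_bytes": len(raw), "labels": []}
    return json.dumps(record).encode("utf-8")


def run_pipeline(
    source: InMemoryStore,
    destination: InMemoryStore,
    annotate: Callable[[str, bytes], bytes],
) -> int:
    """Read each object from source, annotate it, and write the result
    to destination. Returns the number of objects processed."""
    processed = 0
    for key in source.list_keys():
        raw = source.get(key)
        destination.put(f"{key}.annotations.json", annotate(key, raw))
        processed += 1
    return processed


if __name__ == "__main__":
    source = InMemoryStore({"frame_001.jpg": b"\xff\xd8", "frame_002.jpg": b"\xff\xd8\xff"})
    destination = InMemoryStore()
    count = run_pipeline(source, destination, placeholder_annotate)
    print(count, destination.list_keys())
```

Keeping the source and destination separate mirrors the description above: the client only names the two locations, and everything in between happens in the background.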

Quality: tell us something about the quality behind your products / solutions.

M.G: At Keymakr, we have four levels of quality control embedded in our tools and processes to ensure accuracy and address any concerns.

Our QA team checks every project and confirms objects. Additional checks are done by team leads and team members. So, there’s a lot of QA and verification for every task class. We got here after years of experience in managing annotation teams. It’s baked into our tools and systems.

For example, in Keylabs we can see all the tasks done by every operator to filter and assess. Let’s say it’s a team of 10 people, and suddenly the QA team finds a lot of mistakes. We can determine whether it’s a global problem for the team, where they all need to be brought up to speed, or if a specific operator needs retraining on a specific task.
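The distinction between a team-wide problem and a single operator who needs retraining can be illustrated with a small sketch. It assumes QA results are available as (operator, passed) pairs. The function names, the 10% error threshold, and the 50% team share are hypothetical choices for illustration, not how Keylabs works internally.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def error_rates(reviews: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of QA-rejected tasks per operator.

    reviews: (operator_id, passed_qa) pairs, one per reviewed task.
    """
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for operator, passed in reviews:
        totals[operator] += 1
        if not passed:
            errors[operator] += 1
    return {op: errors[op] / totals[op] for op in totals}


def diagnose(
    rates: Dict[str, float],
    threshold: float = 0.10,   # assumed acceptable error rate
    team_share: float = 0.5,   # assumed share of operators that signals a team issue
) -> Tuple[str, List[str]]:
    """Classify the situation as 'ok', 'operators', or 'team'."""
    flagged = sorted(op for op, rate in rates.items() if rate > threshold)
    if not flagged:
        return "ok", []
    if len(flagged) / len(rates) >= team_share:
        return "team", flagged       # most operators struggle: retrain everyone
    return "operators", flagged      # isolated cases: retrain specific operators


if __name__ == "__main__":
    reviews = (
        [("op_a", True)] * 19 + [("op_a", False)]
        + [("op_b", True)] * 10
        + [("op_c", True)] * 6 + [("op_c", False)] * 4
    )
    print(diagnose(error_rates(reviews)))
```

In this example only one of three operators exceeds the threshold, so the sketch points to individual retraining rather than a team-wide refresher.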

Price: tell us something about the costs from an ROI perspective.

M.G: A professional company can achieve 10x the output of an in-house annotation team that lacks established processes. Factors contributing to ROI include:

- Access to the best tools and expertise

- Lower labor costs in certain regions

- Time savings through assisted annotation and automation

- Scalability and faster team building for large projects

You don’t need to look for tools or train your team to use them. Everything is already in place, which reduces onboarding time.

Another thing to note is that the right tools save you even more. One big example is assisted annotation. We would do this evaluation for you: check where automatic annotation or partly automatic annotation is applicable. Instead of spending hours on a video, you can bring it down to minutes and focus on validating results instead.

Because we have an established process, building larger teams is faster for us. For example, say you need 10,000 annotation hours, or twenty thousand videos processed, within two weeks. It’s doable. We can support that by allocating a larger team to the project.
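To get a feel for what 10,000 annotation hours within two weeks implies, here is a back-of-the-envelope calculation. The working assumptions (10 working days in two weeks, 8 productive hours per annotator per day) are illustrative and not figures from Keymakr. The function name is hypothetical.

```python
import math


def annotators_needed(total_hours: float, working_days: int, hours_per_day: float) -> int:
    """Smallest whole team size that can cover total_hours in the given time."""
    return math.ceil(total_hours / (working_days * hours_per_day))


if __name__ == "__main__":
    # Assumed: two weeks = 10 working days, 8 productive hours per day.
    print(annotators_needed(10_000, 10, 8))  # 125
    # With a more conservative 6 productive hours per day:
    print(annotators_needed(10_000, 10, 6))  # 167
```

Under these assumptions, the deadline calls for a team of well over a hundred annotators, which is why established hiring and training processes matter for large projects.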

Feedback: what was the feedback from clients about your products / solutions?

M.G: Many of our happiest clients worked with other companies and then came to us. They have something to compare us to and understand the quality of our outputs.

Some of the stories we’ve heard go something like this: a client gave a complex task to a service provider without doing much due diligence. The provider didn’t really understand the task and delivered low-quality data that hurt the model. Mistakes pile up and rework mounts. At that point you get months and months of delays in the development pipeline of your machine learning model.

So, you’re late to market – in particularly bad cases, a year or two late. Your deployment schedule is off and the product doesn’t work because the annotation company didn’t deliver. However, that’s insignificant compared to the opportunity costs. All the other teams involved, everyone who works on the product, got paid and spent a year working on something that didn’t get to market in time.

Sometimes it’s worse to go with the cheaper option. We’re more on the expensive side, but don’t think about it only in dollars per hour. Think about how much time you’re saving on time-to-value and how reliable the service provider really is.

Do your research, compare options, and find the best fit for your needs to save time and money in the long run.

Maria Greicer is the VP of Partnerships at Keymakr. She has 16+ years of experience as a leader and innovation partner in tech startups. She’s passionate about helping data-driven projects come to life and runs her own podcast ‘It’s All About Data.’